LLMs in Prod Comes to Bangalore

Portkey's LLMs in Prod series hit Bangalore, bringing together AI practitioners to tackle real-world challenges in productionizing AI apps. From AI gateways to agentic workflows to DSPy at scale, here's what's shaping the future of AI in production.

LLMs in Prod, our global series of events for AI practitioners, by practitioners, made its Bangalore debut last month. Following meetups in San Francisco (with Databricks, LlamaIndex, and Lightspeed) and New York (alongside Flybridge, LastMile, Noetica, and NVIDIA), we partnered with Postman to bring the AI practitioner community together in Bangalore.

The response was strong, with over 500 registrations in just four days! AI researchers, engineers, leaders, founders, and PMs shared insights on building and productionizing AI apps.

The event featured three anchor talks, with plenty of conversations in between!

AI Gateways: Streamlining LLM Orchestration

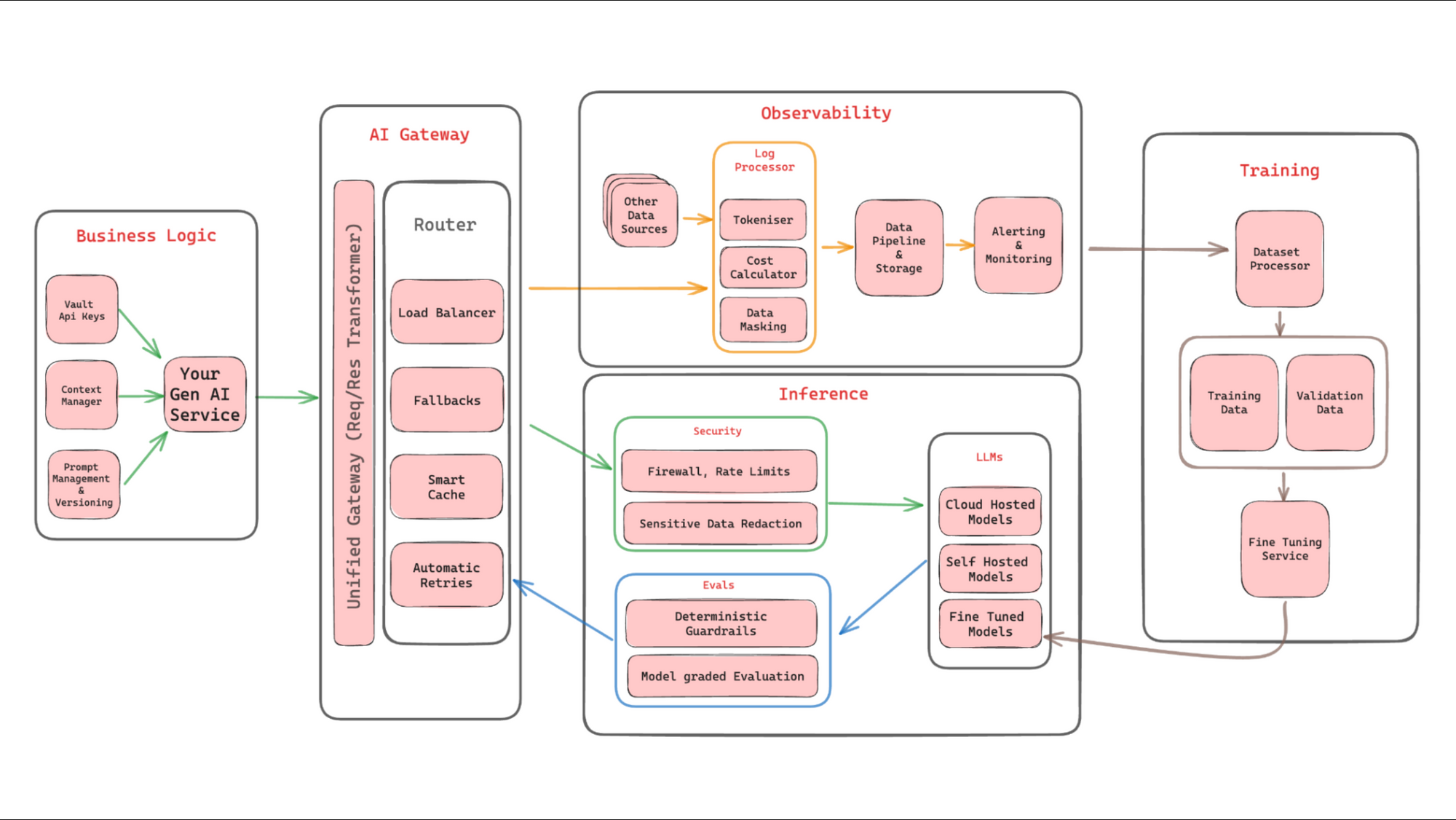

Rohit Agarwal, Co-founder of Portkey, introduced the AI Gateway Pattern. This architectural approach addresses critical challenges in deploying AI at scale, from handling failures & rate limiting, to upgrading models, to access management, and more. The AI Gateway acts as a central nervous system for AI operations, providing unified APIs, routing, security, and robust observability.

"The AI Gateway Pattern is to GenAI what DevOps was to cloud adoption," Agarwal explained, highlighting its potential to streamline AI ops and boost confidence in launching AI features.

Key Lessons

- The AI Gateway Pattern is crucial for enabling enterprise-ready products on Gen AI.

- It addresses common challenges in production AI systems such as handling failures & rate limiting, understanding cost metrics, integrating model upgrades, and more.

- The pattern is inspired by API gateways and the microservices architecture.
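To make the failure-handling and routing ideas above concrete, here is a minimal sketch of a gateway that retries a provider and then falls back to the next one, logging each attempt for observability. It is an illustration under stated assumptions, not Portkey's implementation: `Gateway`, `ProviderError`, `flaky_primary`, and `stable_fallback` are hypothetical names, and the providers are plain functions standing in for real LLM API calls.

```python
import time
from dataclasses import dataclass, field
from typing import Callable


class ProviderError(Exception):
    """Raised by a (hypothetical) provider call on failure or rate limiting."""


@dataclass
class Gateway:
    # Ordered (name, callable) pairs; earlier entries are preferred.
    providers: list[tuple[str, Callable[[str], str]]]
    max_retries: int = 2
    backoff_seconds: float = 0.0
    log: list[dict] = field(default_factory=list)

    def complete(self, prompt: str) -> str:
        """Try each provider in order, retrying before falling back."""
        for name, call in self.providers:
            for attempt in range(1, self.max_retries + 1):
                started = time.perf_counter()
                try:
                    result = call(prompt)
                except ProviderError as exc:
                    # Record the failure, wait a little, then retry.
                    self.log.append(
                        {"provider": name, "attempt": attempt, "ok": False, "error": str(exc)}
                    )
                    time.sleep(self.backoff_seconds * attempt)
                    continue
                self.log.append(
                    {
                        "provider": name,
                        "attempt": attempt,
                        "ok": True,
                        "latency_s": time.perf_counter() - started,
                    }
                )
                return result
        raise ProviderError("all providers failed")


def flaky_primary(prompt: str) -> str:
    # Stand-in for a provider that is currently rate limited.
    raise ProviderError("429 rate limited")


def stable_fallback(prompt: str) -> str:
    # Stand-in for a healthy secondary provider.
    return f"echo: {prompt}"


gateway = Gateway(providers=[("primary", flaky_primary), ("fallback", stable_fallback)])
answer = gateway.complete("hello")
print(answer)
print([(entry["provider"], entry["ok"]) for entry in gateway.log])
```

Because callers only see `gateway.complete`, swapping or reordering providers does not touch application code, which is the same decoupling that API gateways give microservices.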

Postbot: Lessons from the Trenches

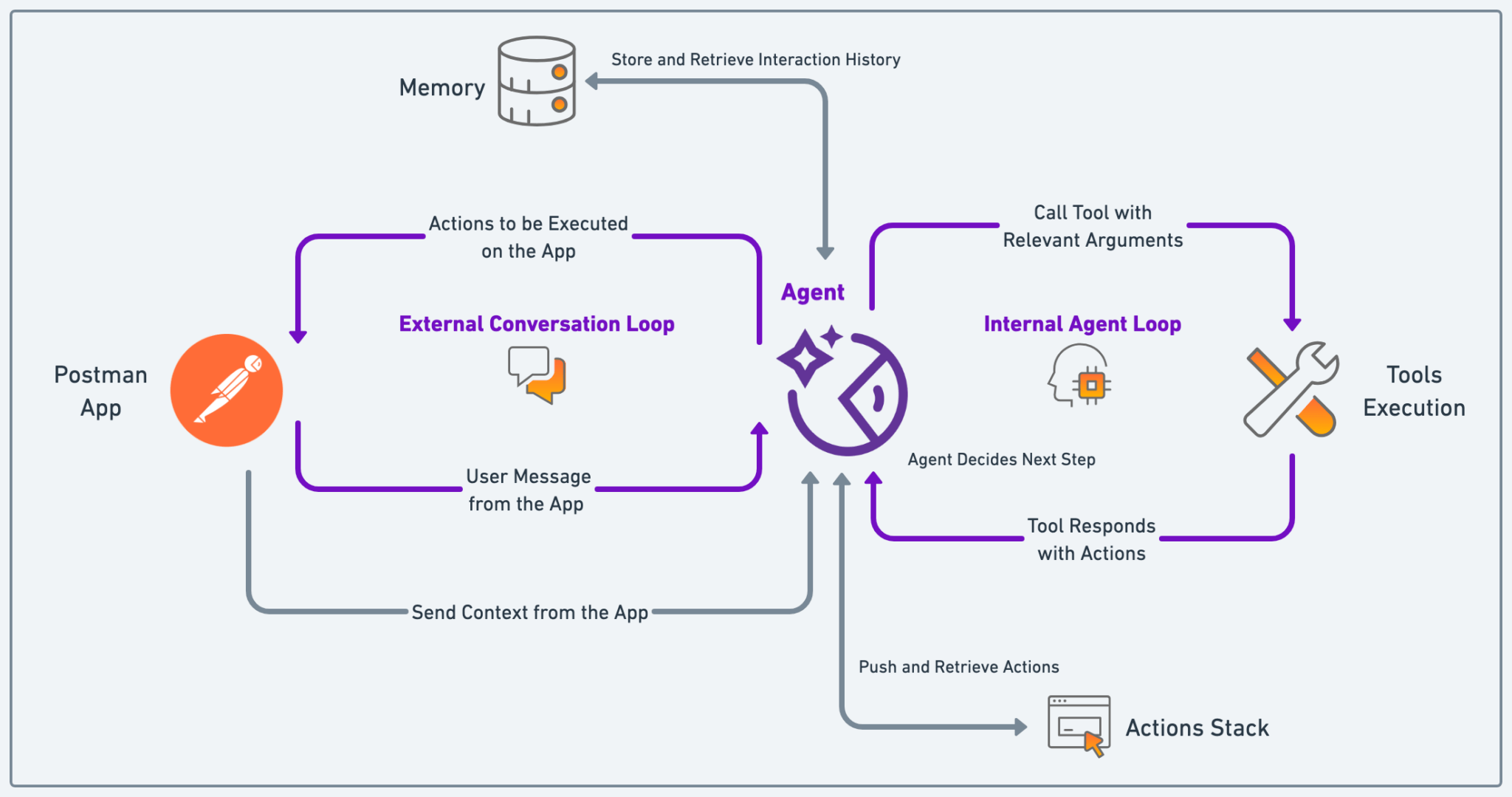

Rajaswa Patil and Nitish Mehrotra from Postman's Applied AI team shared their journey of building Postbot, Postman's AI assistant. Postbot evolved from a set of disconnected AI features into a sophisticated, conversational agent capable of complex, multi-step tasks.

"We needed Postbot to feel like one expert AI assistant, not just a collection of smart features," Patil explained.

The team achieved this through a custom agent architecture that balances autonomy with reliability. This approach allows for controlled agency, fine-grained execution, and easy extensibility - crucial for deploying AI assistants at scale.

Key Points

- Postbot's architecture evolved from a simple routing framework to a complex agent system with external conversation and internal agent loops.

- There are 3 key components of the system: 1) User Intent Classifier: Uses a deterministic neural text classification model. 2) Skills/Agent Tools: Leverage GenAI models to generate content for specific user intents. 3) Actions: Codified set of in-app effects that Postbot can execute.

- Similarly, there are 3 levels of agent architecture in Postbot: 1) Root Agent: Manages conversations and understands user intent and system states. 2) Agent Tools: Generate content or make requests to third-party APIs. 3) Custom Orchestration: Controls agent behavior and enables multi-action execution.

Scaling DSPy: From Concept to Production

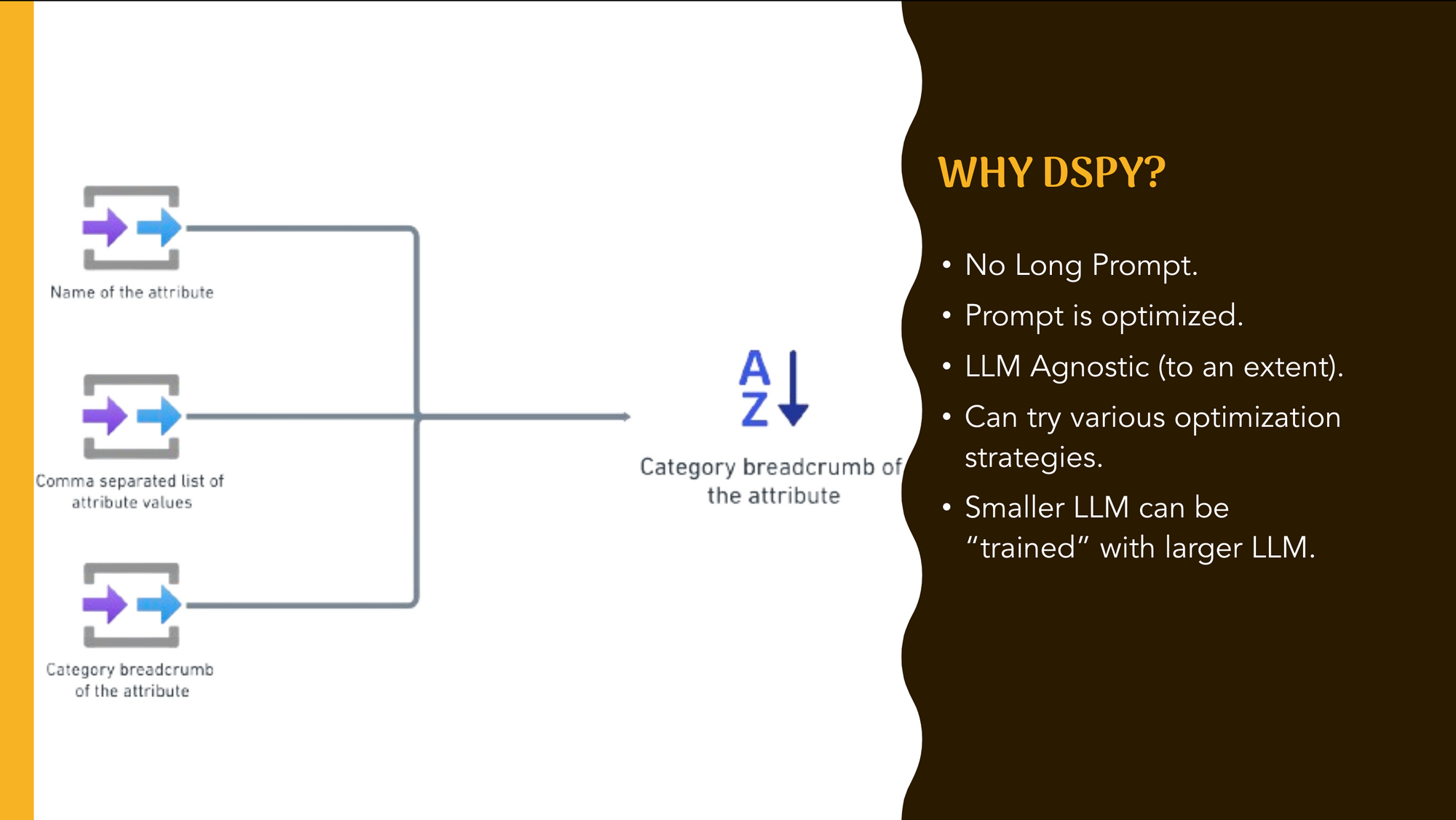

Ganaraj Permunda, Lead Architect at Zoro, shared his experiences scaling DSPy in an e-commerce environment. He demonstrated how DSPy solved complex challenges like normalizing product attributes across millions of items from hundreds of suppliers.

"DSPy revolutionized our approach to LLM integration," Permunda explained. Its LLM-agnostic nature makes it easy to switch between models like Mistral and Llama, optimizing for both performance and cost. Metric definition turned out to be especially important in DSPy: it is the hardest part, but also where the magic happens.

Key Points

- There are 8 steps to building a DSPy app: Define task, Decide pipeline, Prepare examples, Define data, Create metrics, Zero-shot evaluation, Compile with optimizer, and Iterate. (more here)

- There are 3 major DSPy Evaluation Methods: 1) Zero-shot: Basic prompt without optimization. 2) Few-shot: Providing 3-4 examples in the prompt. 3) MIPRO: DSPy optimizes both the number of examples and the prompt.

- Benefits of DSPy in Production: 1) Eliminates need for manual prompt writing and optimization. 2) LLM-agnostic: Easy to switch between different models (e.g., from Mistral to Llama). 3) Allows tuning smaller LLMs with larger ones as trainers.

- Metric definition is crucial and can range from simple string matching to complex LLM-based evaluations.
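As a rough illustration of the "create metrics" and "zero-shot evaluation" steps, the sketch below defines a simple string-matching metric and scores an unoptimized baseline on a tiny dev set. It deliberately does not import DSPy: the metric only follows the `(example, pred, trace=None)` calling convention that DSPy metrics use, while `baseline_program`, `evaluate`, and the three attribute examples are made up for this sketch and are not from the talk.

```python
from types import SimpleNamespace


def exact_match_metric(example, pred, trace=None):
    """Strict metric: the normalized strings must be identical."""
    return example.normalized.strip().lower() == pred.normalized.strip().lower()


def evaluate(program, devset, metric):
    """Average metric score of a program over a dev set."""
    scores = [float(metric(ex, program(ex.raw))) for ex in devset]
    return sum(scores) / len(scores)


# Hypothetical supplier attribute values with the desired normalized form.
devset = [
    SimpleNamespace(raw="12 IN", normalized="12 in"),
    SimpleNamespace(raw="0.5 Inch", normalized="0.5 in"),
    SimpleNamespace(raw="3 ft.", normalized="3 ft"),
]


def baseline_program(raw: str):
    # Stand-in for an unoptimized (zero-shot) LLM call: it only lowercases
    # and drops a trailing period, so it misses unit aliases like "inch".
    return SimpleNamespace(normalized=raw.lower().rstrip("."))


score = evaluate(baseline_program, devset, exact_match_metric)
print(f"zero-shot baseline accuracy: {score:.2f}")
```

The baseline gets two of the three examples right; that number is the reference point an optimizer would then try to beat. A stricter or looser metric (for example, an LLM-based judge) would plug into `evaluate` the same way.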

The Road Ahead for LLMs in Production

As we reflect on the insights shared at LLMs in Prod Bangalore, several key themes emerge that are shaping the future of AI in production environments:

- Middleware and Orchestration: Rohit's talk on the AI Gateway Pattern highlighted the growing importance of middleware in LLM deployments. As organizations scale their AI operations, tools that can manage routing, observability, and security across multiple models, while handling production load, will become essential. We can expect to see more innovation in this space, with AI-specific orchestration layers becoming a standard part of the AI ops stack.

- Evolving Architectures for AI Assistants: The journey of Postbot, as detailed by Rajaswa and Nitish, showcases the rapid evolution of AI assistant architectures. The shift from simple intent classification to more complex agent-based systems with custom orchestration layers points to a future where AI assistants will become increasingly sophisticated and context-aware.

- Democratization of LLM Prompting & Fine-tuning: Ganaraj's talk demonstrated how tools like DSPy are making it easier for companies to prompt, fine-tune, and optimize LLMs for very specific use cases. This democratization of LLM customization is likely to accelerate, enabling more businesses to leverage AI for domain-specific tasks without needing to do "magic prompting" or model training.

- LLM-Agnostic Approaches: A common thread across all presentations was the need for flexibility in model selection. Tools and architectures that allow easy switching between different LLMs (like the AI Gateway and DSPy) are likely to gain more traction. This trend towards LLM-agnosticism will help organizations future-proof their AI investments and take advantage of rapid advancements in model capabilities.

As we look to the future, it's clear that the field of LLMs in production is rapidly evolving. The challenges and solutions shared at this event are just the beginning!

The event also speaks to the vibrant worldwide community that's driving these advancements. As we continue to explore and push the boundaries of what's possible with LLMs in production, events like these will remain crucial for sharing knowledge, sparking new ideas, and collectively solving the challenges that lie ahead.

Stay connected with this growing community of AI practitioners

Subscribe to our event calendar to know when the next event takes place. Find recordings of the previous events here, and connect with other practitioners on the LLMs in Prod Community here.