LLMs in Prod 2025: Insights from 2 Trillion+ Tokens

Real-world analysis of 2 trillion+ production tokens across 90+ regions on Portkey's AI Gateway. Get the full LLMs in Prod'25 report today.

2024 marked the year when AI moved from experiments to mission-critical systems. But as organizations scaled their implementations, they encountered challenges that few were prepared for.

Through Portkey's AI Gateway, we've had a unique vantage point into how enterprises are building, scaling, and optimizing their AI infrastructure. We’ve worked with 650+ organizations, processing over 2 trillion tokens across 90+ regions. Along the way, everyone kept asking us the same questions:

- Which providers are leading the way?

- How can we ensure reliability in production?

- What patterns are emerging in enterprise AI infrastructure?

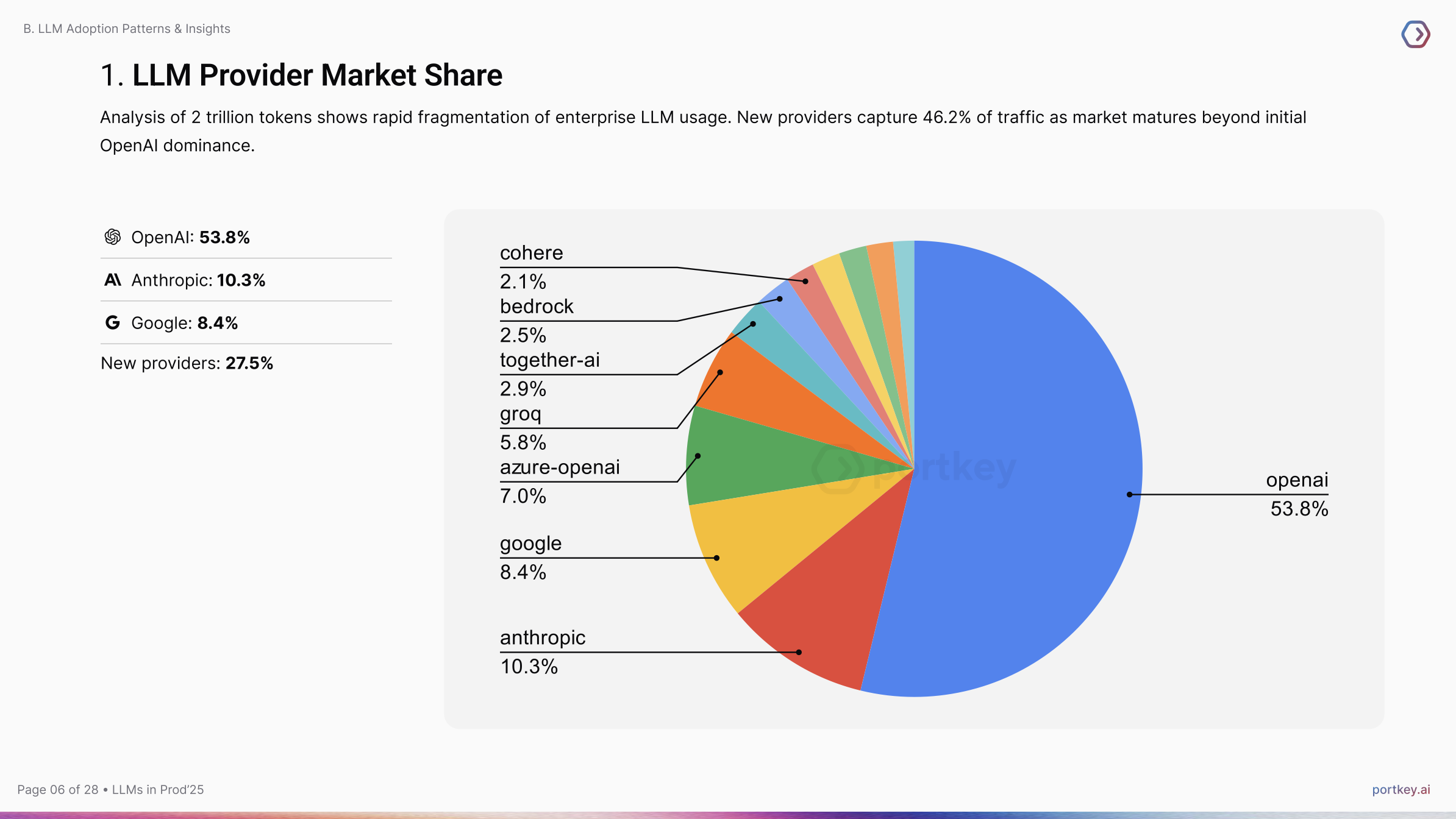

To help answer these questions, our team analyzed the 2 trillion+ tokens processed by our AI Gateway in 2024. The result is LLMs in Prod, a data-driven report on how companies are using LLMs in production. Today we are excited to share it with you.

Key Learnings from 2 Trillion+ Tokens

As organizations scaled their AI efforts, three insights stood out. These takeaways highlight the challenges and opportunities in building reliable, scalable AI systems.

With LLMs taking over the world, everyone’s asking the same question: “Which LLM is the most utilized of them all?” Let’s unpack what we’ve seen.

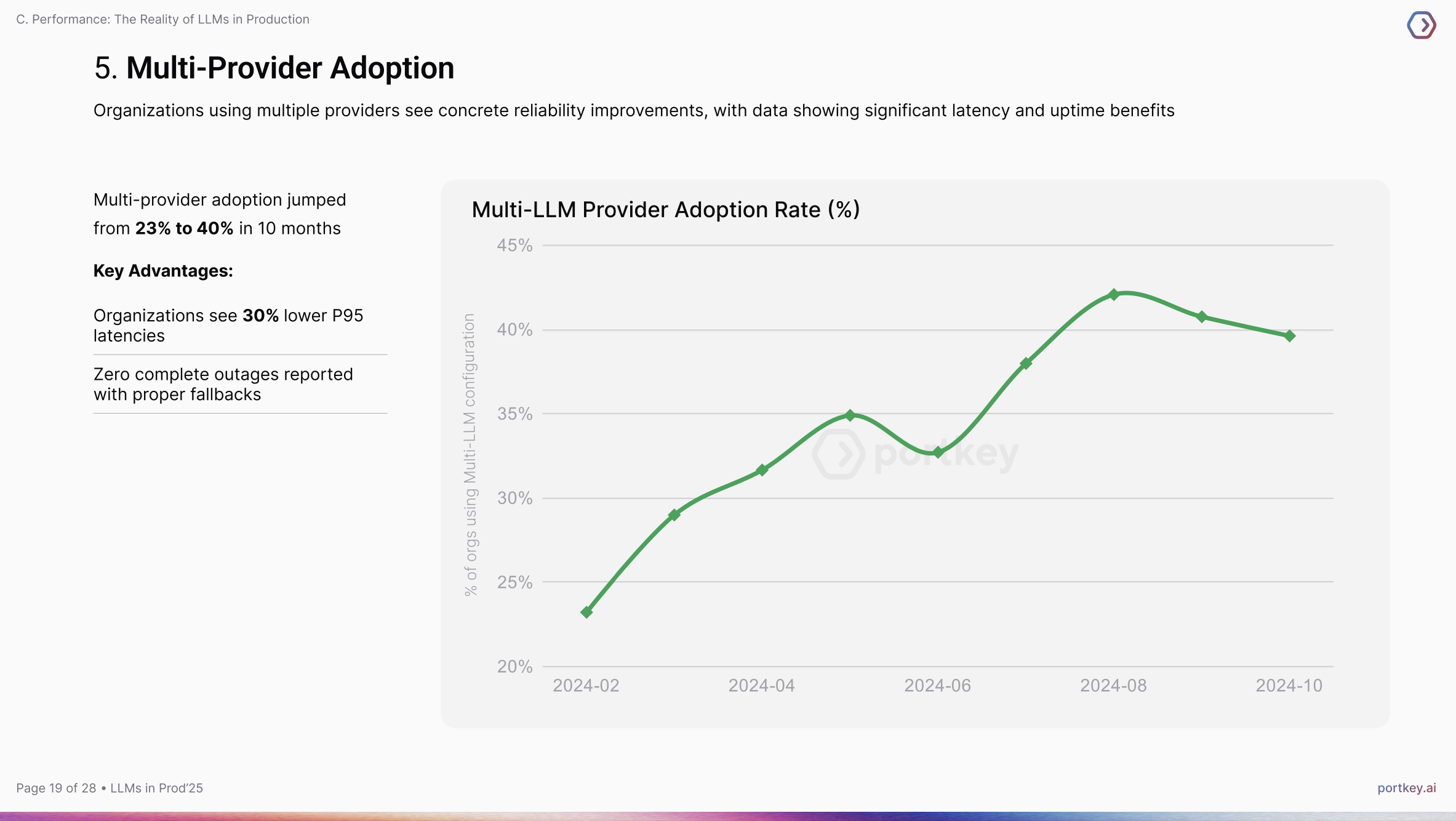

1. Multi-Provider Strategies Are Becoming the Norm

Our data shows a dramatic shift toward multi-provider adoption, driven by the need for redundancy and improved performance. Multi-provider adoption jumped from 23% to 40% in the last year.

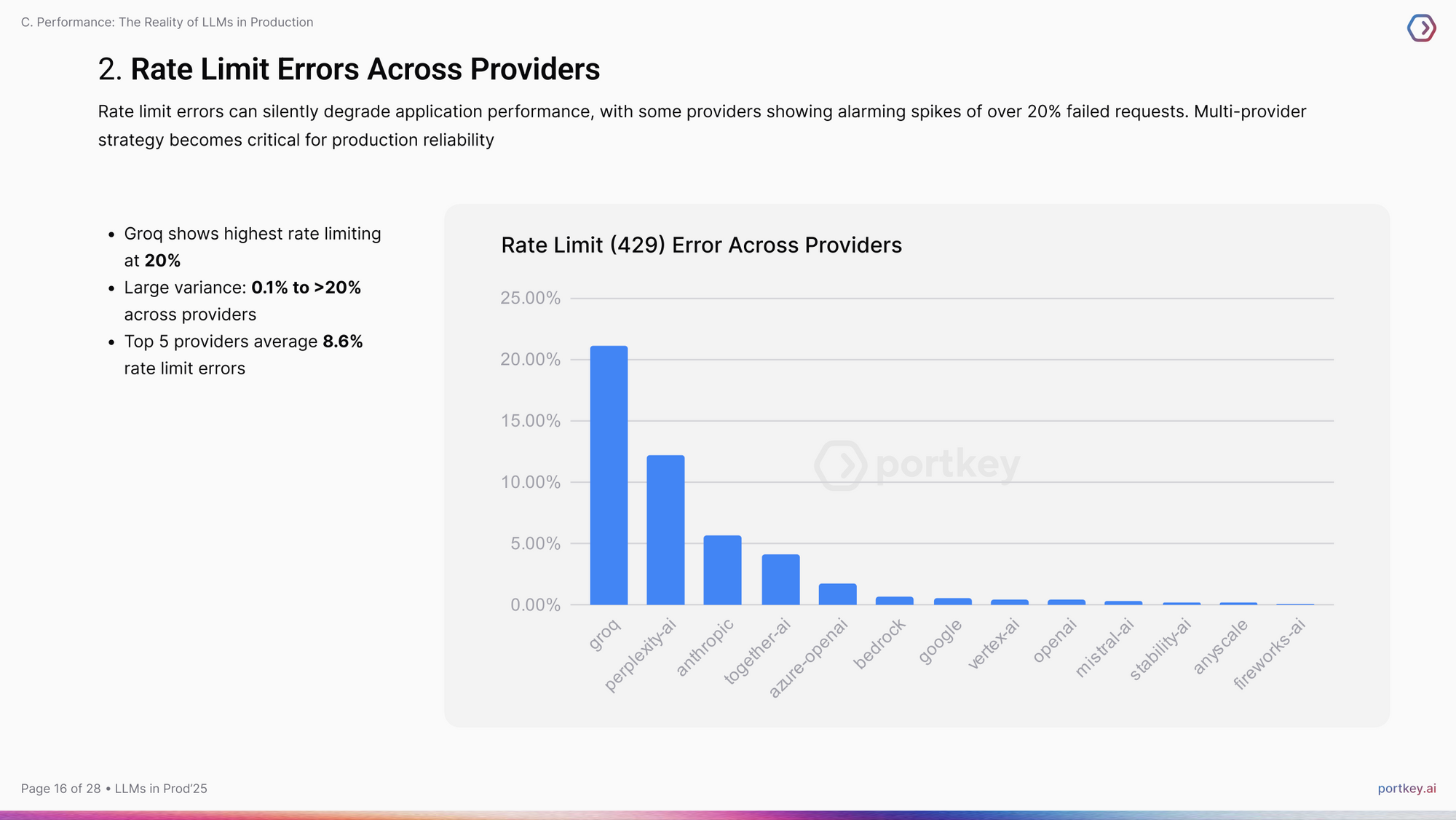
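One common way to get redundancy from multiple providers is an ordered fallback chain: try the primary provider, and move to the next one if the call fails. The sketch below is a minimal illustration of that idea, not Portkey's implementation. The provider functions (`flaky_primary`, `stable_secondary`) are hypothetical stand-ins for real client calls.

```python
from typing import Callable, Sequence, Tuple


class AllProvidersFailed(Exception):
    """Raised when every provider in the chain has failed."""


def complete_with_fallback(
    prompt: str,
    providers: Sequence[Tuple[str, Callable[[str], str]]],
) -> Tuple[str, str]:
    """Try each (name, call) pair in order; return the first success.

    Returns (provider_name, completion_text).
    """
    errors = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except Exception as exc:  # a real system would catch narrower errors
            errors.append(f"{name}: {exc}")
    raise AllProvidersFailed("; ".join(errors))


# Hypothetical providers, used only for illustration.
def flaky_primary(prompt: str) -> str:
    raise TimeoutError("primary timed out")


def stable_secondary(prompt: str) -> str:
    return f"echo: {prompt}"


used, text = complete_with_fallback(
    "hello",
    [("primary", flaky_primary), ("secondary", stable_secondary)],
)
print(used, text)
```

In practice the order of the chain, the errors that trigger a fallback, and any retry budget per provider are design choices that depend on your latency and cost constraints.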

2. Reliability Is the New Battleground

As enterprises scale their AI systems, reliability has emerged as a key concern. Our analysis revealed that during peak times, some providers experience failure rates of over 20%.

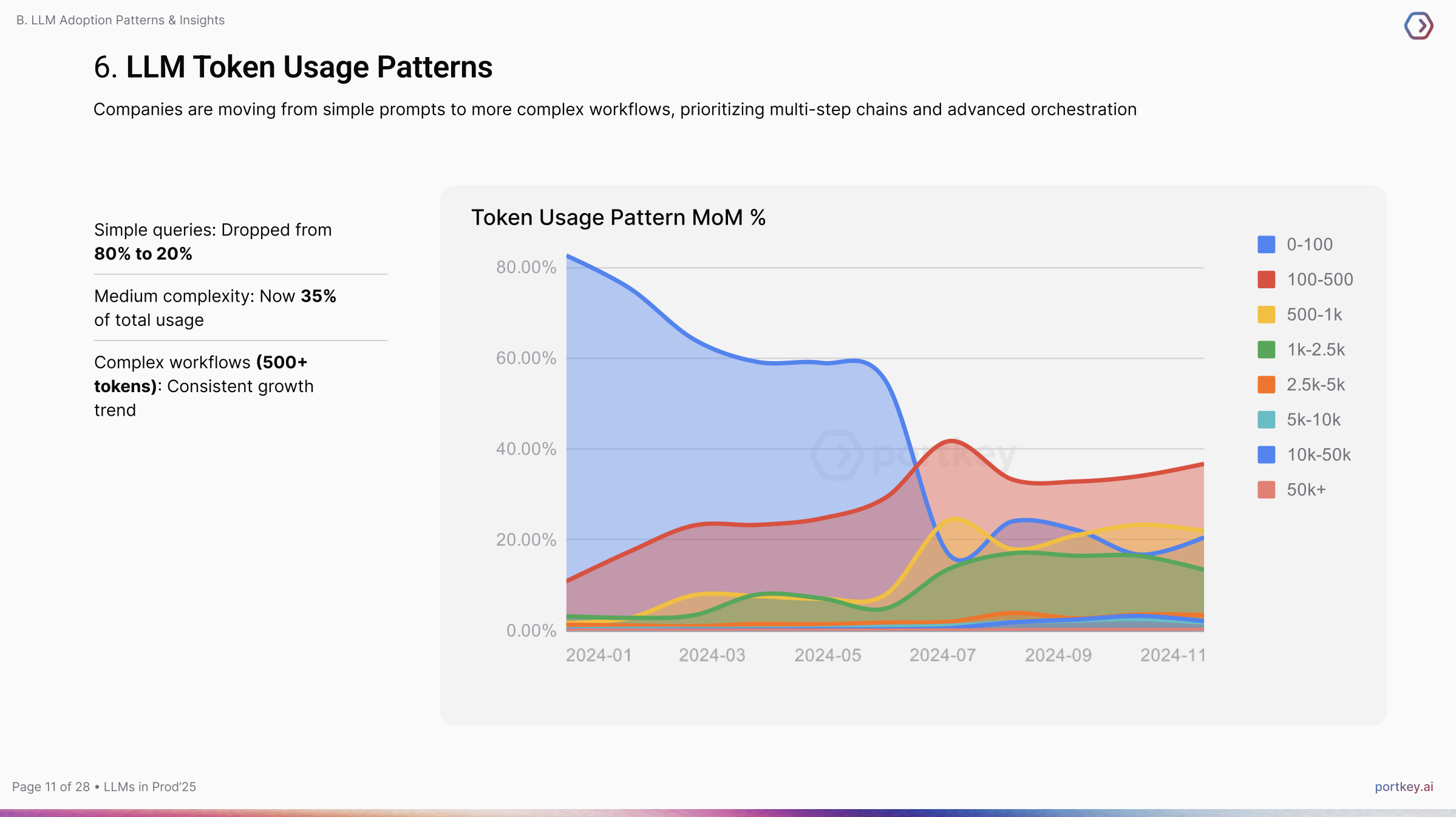

3. Complexity Is Scaling with Demand

Enterprises moved quickly from basic LLM usage to more sophisticated implementations. Simple queries accounted for 80% of usage in early 2024 but dropped to 20% by late 2024, as companies adopted complex workflows and multi-step chains that use more tokens per request.

In just a year we saw:

- Requests in the 100-500 token range grew from 10% to 37%.

- Requests in the 500+ token range saw consistent, sustained growth.
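To track the same shift in your own traffic, you can bucket requests by token count and compare each bucket's share over time. The sketch below assumes three buckets (under 100, 100-500, and over 500 tokens). The exact boundaries used in the report are not stated here, so treat them as an assumption, and note that the sample counts are made up for illustration.

```python
from collections import Counter
from typing import Dict, Iterable

# Assumed bucket labels; the boundaries are illustrative, not from the report.
BUCKETS = ("<100", "100-500", "500+")


def bucket_for(tokens: int) -> str:
    """Map a request's token count to a size bucket."""
    if tokens < 100:
        return "<100"
    if tokens <= 500:
        return "100-500"
    return "500+"


def bucket_shares(token_counts: Iterable[int]) -> Dict[str, float]:
    """Return the fraction of requests that falls in each bucket."""
    counts = Counter(bucket_for(t) for t in token_counts)
    total = sum(counts.values())
    if total == 0:
        return {b: 0.0 for b in BUCKETS}
    return {b: counts[b] / total for b in BUCKETS}


# Made-up per-request token counts.
sample = [40, 80, 120, 300, 450, 900, 60, 75, 30, 20]
shares = bucket_shares(sample)
print(shares)
```

Running this per month or per quarter gives a simple time series of bucket shares that can be compared against the trend described above.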

The Road Ahead

As we look to 2025, it's clear that the focus must shift from basic implementation to building reliable, efficient, and secure AI infrastructure at scale. But the path forward isn't obvious.

Our complete "LLMs in Production 2025" report covers:

- Detailed reliability benchmarks across providers

- Architectural patterns for multi-provider deployments

- Cost optimization frameworks for complex workflows

- LLM adoption patterns and more...