Making OpenAI's TypeScript SDK production-ready with an AI Gateway

For a long time, building agentic applications meant working in Python. That’s where the ecosystem was, that’s where the tools were. But for the millions of developers working in TypeScript every day, this created friction. They had to switch languages, learn unfamiliar abstractions, or build custom scaffolding just to prototype agents.

That changes with the OpenAI Agents SDK for TypeScript.

This SDK brings agent-first development into the TypeScript ecosystem. It’s designed around two key principles:

- Minimal but powerful: Just enough features to let you build real-world agents, without overwhelming you with layers of abstraction.

- Works out of the box: You can start running an agent in minutes, while still having the flexibility to customize behaviors as your app scales.

Common production challenges when deploying agents

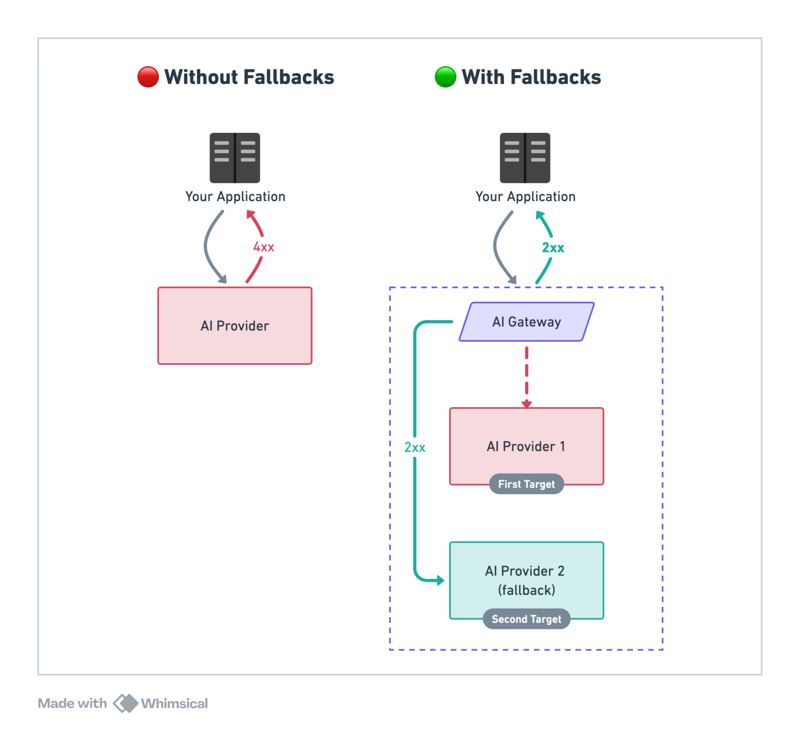

Agents going down in production: One failed model call, a network timeout, or an unhandled exception, and your agent stops working. Without retries, timeouts, or fallback logic, agents become fragile, especially under load or during transient API issues.

Debugging takes too long without visibility: In production, agents often involve multiple steps: prompting, tool calls, inference, and more. When something breaks or behaves incorrectly, it's nearly impossible to trace the exact path without structured logs, metrics, or traces that visualize the decision flow and external calls.

No tracking of LLM spend by user, team, or agent: Token usage can become a hidden budget sink. Without visibility, teams have no way to know which agent workflows or tool calls are driving up costs or causing unexpected billing spikes. That means budgeting and forecasting become guesswork.

Overused or misused tools with no access controls: Anyone or any piece of logic can invoke any tool in your system unless you build custom checks. This can lead to unauthorized or inefficient tool usage, potential data leaks, or unmanaged side effects.

Locked into a single model provider: Many applications benefit from using multiple models optimized for different tasks. But switching between OpenAI, Anthropic (Claude), or Bedrock requires rewrites or duplicate logic. This lock-in makes it hard to gradually test or adopt better-performing models.

Prompt updates mean redeploys: Prompts are the heart of agent workflows. Yet updating prompts typically means pushing code changes, running CI/CD pipelines, and redeploying. That slows down iteration and limits experimentation to developers only.

Portkey makes your AI agents production-ready

The OpenAI Agents SDK gives developers a lightweight, TypeScript-native way to build and chain agent workflows. But once you’re moving into production, especially in a team or enterprise environment, you need more than code-level abstractions. You need infrastructure. You need control. That’s where Portkey steps in.

Portkey is an AI Gateway built for production-grade LLM applications. It integrates directly with the OpenAI Agents SDK, without changing your code, and fills in the operational gaps that become critical as you scale.

Here’s how Portkey helps your agents go from “it runs” to “it runs reliably, securely, and cost-efficiently”:

Build resilient agents with failovers and provider flexibility

Your OpenAI agent doesn’t have to go down just because one API call failed. Portkey lets you define retries, timeouts, and fallback strategies for every LLM or tool used in your agent loop. So if a model like gpt-4o fails, Portkey can automatically retry with another OpenAI model or even fall back to Claude or Bedrock, without interrupting the flow of the agent.

This resilience is key when your agents are customer-facing or running in high-volume environments.
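To make the retry-and-fallback behavior concrete, here is a minimal TypeScript sketch of the general pattern. It is not Portkey's implementation or API: `Target`, `callWithFallback`, and the target names are hypothetical, and a gateway would apply equivalent logic on its side so that your agent code does not have to.

```typescript
// Illustrative only: a generic retry-then-fallback wrapper.
// All names here are hypothetical and not part of any SDK.
type ModelCall = (prompt: string) => Promise<string>;

interface Target {
  name: string;        // label for the provider or model, e.g. "primary"
  call: ModelCall;     // function that performs the actual request
  maxAttempts: number; // how many times to try this target before moving on
}

async function callWithFallback(
  targets: Target[],
  prompt: string,
): Promise<{ target: string; output: string }> {
  let lastError: unknown;
  for (const target of targets) {
    for (let attempt = 1; attempt <= target.maxAttempts; attempt++) {
      try {
        const output = await target.call(prompt);
        return { target: target.name, output };
      } catch (err) {
        lastError = err; // remember the failure and keep going
      }
    }
  }
  throw new Error(`All targets failed: ${String(lastError)}`);
}
```

Targets are tried in order, so the list itself expresses the fallback priority; each target exhausts its own retries before the next one is used.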

End-to-end visibility across every call and interaction

With Portkey’s logging and tracing, you get a complete view of how your agents behave in production. See which prompts were sent, what tools were invoked, how long each step took, and how many tokens were used. You can trace issues down to a specific user, session, or input, so there is no more debugging in the dark. Portkey integrates with your existing observability stack or can be used standalone to visualize agent workflows.
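As a rough illustration of what per-step tracing captures, the sketch below groups step records by a trace ID and summarizes latency and token usage for one agent run. The `StepRecord` fields and the `TraceLog` class are hypothetical and do not reflect Portkey's actual log schema.

```typescript
// Illustrative sketch: names and fields are hypothetical.
interface StepRecord {
  traceId: string;    // groups all steps of one agent run
  step: string;       // e.g. "prompt", "tool:search", "completion"
  durationMs: number;
  tokens: number;
}

interface TraceSummary {
  steps: string[];
  totalMs: number;
  totalTokens: number;
}

class TraceLog {
  private records: StepRecord[] = [];

  record(entry: StepRecord): void {
    this.records.push(entry);
  }

  // Collect every step recorded under one trace ID, in insertion order.
  summarize(traceId: string): TraceSummary {
    const summary: TraceSummary = { steps: [], totalMs: 0, totalTokens: 0 };
    for (const r of this.records) {
      if (r.traceId !== traceId) continue;
      summary.steps.push(r.step);
      summary.totalMs += r.durationMs;
      summary.totalTokens += r.tokens;
    }
    return summary;
  }
}
```

With records keyed this way, a slow or expensive run can be narrowed down to the individual step that caused it.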

Apply safety guardrails across inputs, tools, and responses

Portkey allows you to define and enforce safety checks throughout the agent lifecycle. You can:

- Block or sanitize user inputs before they hit the model

- Restrict tool usage to authorized roles or specific contexts

- Monitor and redact harmful or unwanted outputs from LLMs

All of this is configurable at the gateway layer, with no code changes required. Portkey also integrates with top guardrail platforms, including Pangea, Mistral, Pillar Security, and many more, to run your custom policies seamlessly.
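The checks listed above can be sketched in a few lines of TypeScript. This is only an illustration of the idea: the email pattern is deliberately simple, and the roles, tool names, and `toolPolicy` table are hypothetical rather than anything Portkey or its partners ship.

```typescript
// Illustrative sketch: the policy table, roles, and tool names are hypothetical.
const EMAIL_PATTERN = /[\w.+-]+@[\w-]+\.[\w.-]+/g;

// Replace anything that looks like an email address before text reaches the
// model. The same function can be applied to model output.
function redactEmails(text: string): string {
  return text.replace(EMAIL_PATTERN, "[REDACTED_EMAIL]");
}

// Which tools each role may invoke.
const toolPolicy: Record<string, string[]> = {
  support: ["search_docs"],
  admin: ["search_docs", "issue_refund"],
};

// Unknown roles get an empty allow-list, so they are denied by default.
function isToolAllowed(role: string, tool: string): boolean {
  const allowed = toolPolicy[role] ?? [];
  return allowed.includes(tool);
}
```

Denying unknown roles by default keeps a misconfigured caller from silently gaining access to a tool.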

Control retries, enforce timeouts, and avoid single points of failure

Agent loops can get stuck or stall when a model is slow or a tool fails. Portkey gives you configurable retry logic, execution timeouts, and circuit breakers. You define how tolerant your agents should be, and Portkey makes sure those policies are enforced. This means fewer edge-case failures, more consistent agent behavior, and a smoother user experience.
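For readers unfamiliar with circuit breakers, here is a minimal sketch of the pattern in TypeScript. It is a generic illustration, not Portkey's implementation; the class name, threshold, and cooldown parameters are assumptions chosen for the example, and the clock is injectable so the behavior can be tested without waiting.

```typescript
// Illustrative sketch of a circuit breaker; all names are hypothetical.
class CircuitBreaker {
  private failures = 0;
  private openedAt: number | null = null;
  private readonly threshold: number;
  private readonly cooldownMs: number;
  private readonly now: () => number;

  constructor(threshold: number, cooldownMs: number, now: () => number = () => Date.now()) {
    this.threshold = threshold;
    this.cooldownMs = cooldownMs;
    this.now = now;
  }

  // Closed circuit: always allow. Open circuit: allow a trial request only
  // after the cooldown has elapsed.
  canRequest(): boolean {
    if (this.openedAt === null) return true;
    return this.now() - this.openedAt >= this.cooldownMs;
  }

  recordSuccess(): void {
    this.failures = 0;
    this.openedAt = null;
  }

  // Open (or re-open) the circuit once failures reach the threshold.
  recordFailure(): void {
    this.failures++;
    if (this.failures >= this.threshold) this.openedAt = this.now();
  }
}
```

While the circuit is open, callers skip the failing dependency immediately instead of stacking up slow requests, which is what keeps an agent loop from stalling.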

Centralize and manage prompts without redeploys

As your agents evolve, so do your prompts. Portkey lets you manage prompts outside your codebase: store, version, and A/B test them without needing a deploy. This allows product teams, prompt engineers, and ops teams to collaborate on improving agent behavior without slowing down engineering.
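The idea of versioned prompts living outside application code can be sketched as follows. This in-memory `PromptStore` is purely hypothetical and stands in for a hosted prompt library; the `{{name}}` placeholder syntax is an assumption made for the example.

```typescript
// Illustrative sketch: an in-memory stand-in for a hosted prompt library.
class PromptStore {
  private versions = new Map<string, string[]>();

  // Append a new version and return its 1-based version number.
  publish(id: string, template: string): number {
    const list = this.versions.get(id) ?? [];
    list.push(template);
    this.versions.set(id, list);
    return list.length;
  }

  // Render the requested version (latest by default), filling {{name}} placeholders.
  render(id: string, vars: Record<string, string>, version?: number): string {
    const list = this.versions.get(id) ?? [];
    const template = list[(version ?? list.length) - 1];
    if (template === undefined) {
      throw new Error(`Unknown prompt ${id}@${version ?? "latest"}`);
    }
    return template.replace(/\{\{(\w+)\}\}/g, (_match: string, key: string) => {
      const value = vars[key];
      if (value === undefined) throw new Error(`Missing variable: ${key}`);
      return value;
    });
  }
}
```

Because the agent only refers to a prompt by ID, publishing a new version changes behavior without a code change, and pinning a version number makes rollbacks and A/B comparisons straightforward.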

Govern usage with team-level policies and spend controls

With Portkey, every request is tagged with metadata like user ID, team, environment, and agent name. That means you can:

- Set rate limits per team or user

- Enforce budget caps to prevent runaway token usage

- Control which models and tools are available in dev, staging, or prod

This brings real governance to AI agent usage, which is critical for enterprise and multi-team deployments.
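A budget cap of the kind described above boils down to checking usage against a limit before each request. The sketch below shows that check for per-team token budgets; `TokenBudget` and the team names are hypothetical, and a gateway would enforce the equivalent policy centrally rather than in application code.

```typescript
// Illustrative sketch: per-team token caps. All names are hypothetical.
class TokenBudget {
  private used = new Map<string, number>();
  private readonly caps: Map<string, number>;

  constructor(caps: Record<string, number>) {
    this.caps = new Map(Object.entries(caps));
  }

  // Record usage and return true if the team stays within its cap;
  // otherwise leave usage unchanged and return false.
  tryConsume(team: string, tokens: number): boolean {
    const cap = this.caps.get(team);
    if (cap === undefined) return false; // teams without a cap are denied
    const current = this.used.get(team) ?? 0;
    if (current + tokens > cap) return false;
    this.used.set(team, current + tokens);
    return true;
  }

  usage(team: string): number {
    return this.used.get(team) ?? 0;
  }
}
```

Rejected requests do not count against the budget, so a single oversized request cannot lock a team out of smaller ones that still fit.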

Works seamlessly with the OpenAI TypeScript SDK

You don’t have to change your agent code. You just route your agent’s model and tool calls through the Portkey Gateway. That’s it. Everything else (tracing, guardrails, retries, and prompt management) is handled at the gateway. It’s the infrastructure layer that unlocks production-readiness without forcing you to re-architect your workflow.
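Routing through a gateway generally means pointing requests at the gateway's base URL and attaching a few headers. The sketch below only builds such a request and sends nothing. The header names (`x-portkey-api-key`, `x-portkey-trace-id`, `x-portkey-metadata`) are assumptions based on Portkey's public documentation and should be verified against the current docs; `GatewayOptions` and `buildChatRequest` are hypothetical helpers, and the base URL is supplied by the caller.

```typescript
// Illustrative sketch: builds a request for an OpenAI-compatible gateway.
// Header names are assumptions to verify against the gateway's documentation.
interface GatewayOptions {
  baseUrl: string;                   // gateway endpoint, supplied by the caller
  apiKey: string;                    // gateway API key
  traceId?: string;                  // groups the calls of one agent run
  metadata?: Record<string, string>; // e.g. user, team, environment, agent name
}

interface PreparedRequest {
  url: string;
  headers: Record<string, string>;
  body: string;
}

function buildChatRequest(
  opts: GatewayOptions,
  model: string,
  userMessage: string,
): PreparedRequest {
  const headers: Record<string, string> = {
    "content-type": "application/json",
    "x-portkey-api-key": opts.apiKey, // assumed header name
  };
  if (opts.traceId) headers["x-portkey-trace-id"] = opts.traceId; // assumed
  if (opts.metadata) headers["x-portkey-metadata"] = JSON.stringify(opts.metadata); // assumed
  return {
    // Strip trailing slashes so the path is joined cleanly.
    url: `${opts.baseUrl.replace(/\/+$/, "")}/chat/completions`,
    headers,
    body: JSON.stringify({ model, messages: [{ role: "user", content: userMessage }] }),
  };
}
```

In practice, the same base URL and headers would typically be configured once on the OpenAI client that the Agents SDK uses, rather than assembled by hand for each call.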

Ready to take your agents to production?

The OpenAI Agents SDK brings a refreshing, TypeScript-native approach to building AI agents, making it easier than ever for frontend and full-stack developers to ship powerful agentic workflows. But while the SDK is excellent for development, production environments demand more: resilience, observability, cost control, governance, and safety.

Whether you’re routing calls across providers, applying guardrails, tracking cost per user, or debugging long agent loops, Portkey gives your team the confidence to run agents in production.

If you’re looking to build agents with the OpenAI Agents SDK, take them to production with Portkey. Try it yourself or book a demo with us.