LibreChat vs Open WebUI: Choose the Right ChatGPT UI for Your Organization

Looking to harness AI while keeping your data in-house? Dive into our comprehensive comparison of LibreChat and Open WebUI – two powerful open-source platforms that let you build secure ChatGPT-like systems.

Every organization wants to harness AI's transformative power. But the real challenge isn't accessing AI – it's doing so while maintaining complete control over your data. For healthcare providers handling patient records, financial institutions managing transactions, or companies navigating GDPR, this isn't just a technical preference – it's a business imperative.

While commercial AI platforms offer compelling capabilities, they often leave organizations exposed when it comes to data sovereignty and cost control. This is where LibreChat and Open WebUI come in – two open-source platforms that have emerged as the go-to solutions for building internal ChatGPT-like systems.

After working with hundreds of organizations that use these tools, I'll help you choose between Open WebUI and LibreChat based on what matters most to your workflow.

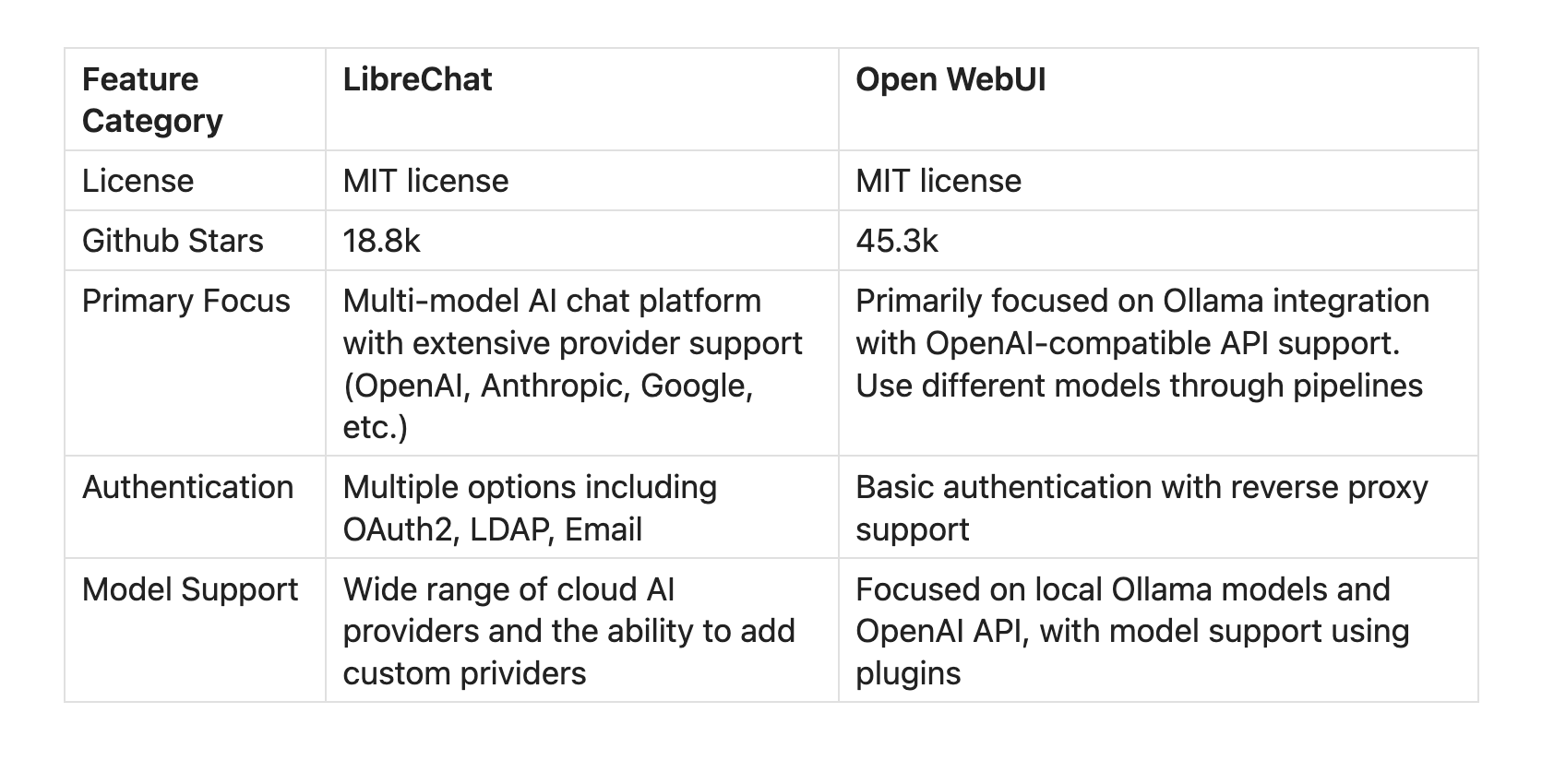

The Foundation: Two Different Philosophies

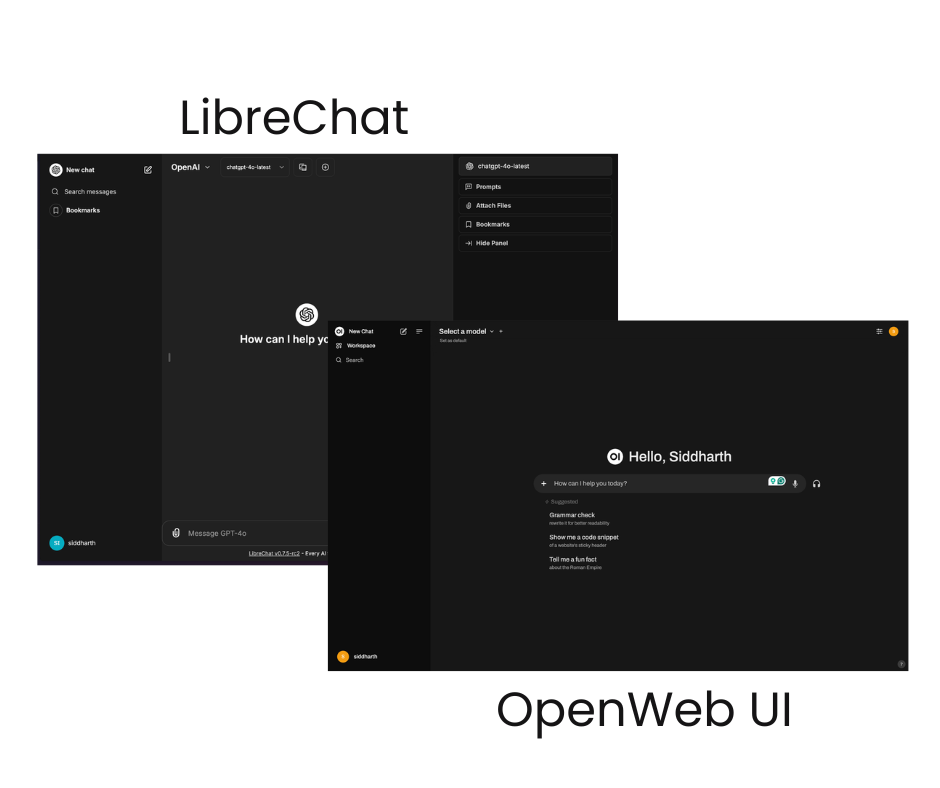

At their core, LibreChat and Open WebUI represent two distinct approaches to the same problem. LibreChat builds custom endpoints for different AI providers, offering a traditional integration approach that enterprises find familiar. Open WebUI, on the other hand, employs a pipeline architecture that lets you mix and match models and prompts with remarkable flexibility.

When integrated with Portkey's AI gateway, both platforms gain reliability and governance features such as conditional routing, fallbacks, retries, and load balancing, which help keep your AI systems responsive and resilient. For example, when your primary model experiences latency spikes or outages, the gateway automatically routes requests to alternative providers, applies configurable retry policies, and maintains consistent performance through load balancing.
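To make the retry-then-fallback behaviour concrete, here is a minimal sketch in plain Python. It is not Portkey's implementation or API; `call_with_fallback`, `ProviderError`, and the two stand-in providers are hypothetical names used only to illustrate the ordering logic.

```python
import time
from typing import Callable, Sequence


class ProviderError(Exception):
    """Raised by a provider callable when a request fails (hypothetical)."""


def call_with_fallback(
    prompt: str,
    providers: Sequence[tuple[str, Callable[[str], str]]],
    max_retries: int = 2,
    backoff_seconds: float = 0.0,
) -> tuple[str, str]:
    """Try each provider in order, retrying each one before falling back.

    Returns (provider_name, response); raises ProviderError if all fail.
    """
    last_error = None
    for name, send in providers:
        for attempt in range(max_retries + 1):
            try:
                return name, send(prompt)
            except ProviderError as exc:
                last_error = exc
                # Back off exponentially after each failed attempt.
                time.sleep(backoff_seconds * (2 ** attempt))
    raise ProviderError(f"all providers failed: {last_error}")


# Hypothetical stand-ins for real model clients.
def flaky_primary(prompt: str) -> str:
    raise ProviderError("primary timed out")


def healthy_backup(prompt: str) -> str:
    return f"backup answer to: {prompt}"


result = call_with_fallback(
    "hello",
    [("primary", flaky_primary), ("backup", healthy_backup)],
)
```

In a real deployment the gateway applies this policy on the server side, so the chat UI only ever sees a single endpoint.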

Also read: Adding enterprise-grade controls to LibreChat

Authentication & Governance

LibreChat shines in enterprise environments with its comprehensive authentication system. It's built for complexity, supporting everything from Discord and GitHub OAuth to Azure AD and AWS Cognito. Its sophisticated automated moderation system tracks user behavior patterns and enforces temporary bans when needed.

Open WebUI takes a more streamlined approach. The first user automatically becomes a super admin, establishing a clear hierarchy. Through Role-Based Access Control and model whitelisting, administrators get precise control over resource access.
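The access model described above can be sketched in a few lines of Python. This is an illustration of the idea, not Open WebUI's code: the `User` and `AccessPolicy` classes, the role names, and the "pending" default for later sign-ups are assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class User:
    name: str
    role: str  # "admin", "user", or "pending" in this sketch


@dataclass
class AccessPolicy:
    # Models that non-admin users may call; names are illustrative.
    model_whitelist: set[str] = field(default_factory=set)
    users: list[User] = field(default_factory=list)

    def register(self, name: str) -> User:
        # The first registered account becomes the admin;
        # later accounts wait for approval (an assumption of this sketch).
        role = "admin" if not self.users else "pending"
        user = User(name, role)
        self.users.append(user)
        return user

    def can_use(self, user: User, model: str) -> bool:
        if user.role == "admin":
            return True
        if user.role == "user":
            return model in self.model_whitelist
        return False  # pending accounts cannot call any model


policy = AccessPolicy(model_whitelist={"llama3"})
alice = policy.register("alice")  # first user, becomes admin
bob = policy.register("bob")      # starts as pending
```

Once an admin promotes `bob` to the `user` role, `policy.can_use(bob, "llama3")` passes while any model outside the whitelist is still refused.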

Making the Right Choice: Your choice between these platforms will largely depend on your organization's needs. Choose LibreChat when you need enterprise authentication flexibility and robust moderation for larger deployments. Opt for Open WebUI when streamlined user management and strong administrative control are priorities.

Portkey integration adds critical security infrastructure to both platforms. The system automatically detects and anonymizes PII, enforces configurable guardrails and maintains comprehensive audit logs of all AI interactions. Organizations can implement virtual API keys with predefined budget thresholds and usage constraints, enabling teams to work effectively while maintaining security and cost controls.
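As a rough illustration of how a budget threshold on a virtual key behaves, consider the sketch below. `VirtualKey`, `BudgetExceeded`, and the dollar figures are hypothetical; a real gateway tracks spend on the server side and this is not Portkey's API.

```python
from dataclasses import dataclass


class BudgetExceeded(Exception):
    """Raised when a request would push a key past its budget."""


@dataclass
class VirtualKey:
    team: str
    budget_usd: float
    spent_usd: float = 0.0

    def charge(self, cost_usd: float) -> float:
        """Record a request's cost and return the remaining budget.

        The request is refused, and nothing is recorded, if it would
        exceed the budget.
        """
        if self.spent_usd + cost_usd > self.budget_usd:
            raise BudgetExceeded(
                f"{self.team} would exceed ${self.budget_usd:.2f}"
            )
        self.spent_usd += cost_usd
        return self.budget_usd - self.spent_usd


key = VirtualKey(team="research", budget_usd=10.0)
remaining = key.charge(6.0)
```

A second `key.charge(5.0)` would raise `BudgetExceeded`, which is the point where a gateway would reject the request instead of forwarding it to the provider.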

RAG and Web Search

Both platforms make AI smarter through Retrieval-Augmented Generation (RAG), but their approaches differ. LibreChat indexes documents through a Python FastAPI service built on LangChain and PostgreSQL with PGVector. Its native Google and Azure search plugins provide quick, context-aware responses.

Open WebUI offers a highly adaptable RAG setup that works with both local and remote sources. Users can load documents directly into chat workspaces or inject web search results from various providers. Its YouTube RAG pipeline for video content and hybrid search feature (combining BM25 and CrossEncoder) show its commitment to comprehensive context understanding.
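The general retrieve-then-rerank pattern behind a BM25 plus CrossEncoder setup looks roughly like this. It is a generic sketch, not Open WebUI's implementation: BM25 is written out in plain Python, and `overlap_reranker` is a toy stand-in for a real CrossEncoder model, which would score each (query, document) pair with a neural network.

```python
import math
from collections import Counter
from typing import Callable


def bm25_scores(query: str, docs: list[str],
                k1: float = 1.5, b: float = 0.75) -> list[float]:
    """Score every document against the query with the BM25 formula."""
    tokenized = [d.lower().split() for d in docs]
    n = len(tokenized)
    avg_len = sum(len(t) for t in tokenized) / n
    # Number of documents containing each term.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    scores = []
    for tokens in tokenized:
        tf = Counter(tokens)
        score = 0.0
        for term in query.lower().split():
            if term not in tf:
                continue
            idf = math.log(1 + (n - df[term] + 0.5) / (df[term] + 0.5))
            norm = tf[term] + k1 * (1 - b + b * len(tokens) / avg_len)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores


def overlap_reranker(query: str, doc: str) -> float:
    # Toy stand-in for a CrossEncoder: Jaccard overlap of word sets.
    q, d = set(query.lower().split()), set(doc.lower().split())
    return len(q & d) / len(q | d) if q | d else 0.0


def hybrid_search(query: str, docs: list[str],
                  rerank: Callable[[str, str], float],
                  top_k: int = 2) -> list[str]:
    # Stage 1: cheap lexical recall with BM25.
    scores = bm25_scores(query, docs)
    order = sorted(range(len(docs)), key=lambda i: scores[i], reverse=True)
    candidates = [docs[i] for i in order[:top_k]]
    # Stage 2: re-score only the candidates with the stronger model.
    return sorted(candidates, key=lambda d: rerank(query, d), reverse=True)


docs = [
    "the cat sat on the mat",
    "dogs chase cats in the park",
    "stock markets fell sharply today",
]
results = hybrid_search("cat mat", docs, overlap_reranker)
```

The two-stage design keeps the expensive reranker off the full corpus: it only ever sees the `top_k` candidates that the lexical stage lets through.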

Portkey's semantic caching layer reduces redundant API calls by identifying similar questions and retrieving relevant cached responses, optimizing both cost and performance while maintaining response quality.
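The idea behind semantic caching can be shown with a small sketch. This is not Portkey's implementation: `SemanticCache` is a hypothetical class, the similarity threshold is arbitrary, and `embed` uses bag-of-words counts as a toy stand-in for a real embedding model.

```python
import math
from collections import Counter
from typing import Optional


def embed(text: str) -> Counter:
    # Toy stand-in for an embedding model: bag-of-words counts.
    return Counter(text.lower().replace("?", "").split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


class SemanticCache:
    def __init__(self, threshold: float = 0.8):
        self.threshold = threshold
        self.entries: list[tuple[Counter, str]] = []

    def put(self, question: str, answer: str) -> None:
        self.entries.append((embed(question), answer))

    def get(self, question: str) -> Optional[str]:
        """Return the cached answer for the most similar stored question,
        or None if nothing is similar enough."""
        query = embed(question)
        best = max(self.entries,
                   key=lambda entry: cosine(query, entry[0]),
                   default=None)
        if best is not None and cosine(query, best[0]) >= self.threshold:
            return best[1]
        return None


cache = SemanticCache()
cache.put("What is the capital of France?", "Paris")
hit = cache.get("what is the capital of france")
miss = cache.get("How do I reset my password?")
```

A hit skips the model call entirely, which is where the cost saving comes from; the threshold controls how aggressively differently worded questions are treated as the same one.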

Model Support

Both platforms support a wide range of language models through OpenAI API compatibility. Open WebUI distinguishes itself with native Ollama integration, letting users create and manage Ollama models directly through its interface.
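OpenAI API compatibility means the same request shape works against different backends, with only the base URL, key, and model name changing. The sketch below builds (but does not send) two such requests using the standard library. The helper name `build_chat_request`, the API key values, and the model names are placeholders; the local URL assumes Ollama's default port with its OpenAI-compatible `/v1` route.

```python
import json
from urllib.request import Request


def build_chat_request(base_url: str, api_key: str,
                       model: str, prompt: str) -> Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Same payload shape, different backends (all values are placeholders).
hosted = build_chat_request(
    "https://api.openai.com/v1", "sk-placeholder", "gpt-4o-mini", "Hi"
)
local = build_chat_request(
    "http://localhost:11434/v1", "ollama", "llama3", "Hi"
)
```

This is why both UIs can sit in front of hosted and self-hosted models alike: pointing them at a different endpoint is a configuration change rather than a code change.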

Portkey consolidates model management for both platforms. Organizations can access over 250 LLMs through a unified interface, whether using commercial APIs like OpenAI and Anthropic or running self-hosted models through Ollama, vLLM, or Triton. The integration handles model provisioning, prompt versioning, and routing, simplifying infrastructure management.

Chat Management

Open WebUI emphasizes organizational features with conversation tagging, chat cloning, and a robust memory system. Its standout features include RLHF annotation for feedback collection and community-sharing capabilities.

LibreChat takes a more fluid approach, focusing on conversational flexibility. Users can switch between AI endpoints mid-chat and fork conversations to explore different paths.

Both platforms support model presets and prompt capabilities, making them suitable for team environments. The choice comes down to personal preference.

Deployment: From Development to Production

LibreChat offers a broad deployment spectrum. Teams can start quickly with Docker containers, use direct npm installation for more control, or leverage Helm charts for Kubernetes orchestration. Its cloud deployment options span Digital Ocean, Hugging Face, and Railway, with smooth integration for Cloudflare, Nginx, and Traefik.

Open WebUI takes a container-centric approach, with optimized configurations for Docker, Docker Compose, and Podman. For Python enthusiasts, it offers native installation options through virtual environments. Enterprise users get robust Kubernetes support with both Helm and Kustomize options.

Making a choice: Choose LibreChat if you need flexibility in cloud deployment options and prefer a platform that works across multiple hosting scenarios. Opt for Open WebUI if you're committed to container technologies or require Python-native installation options.

Portkey's on-premises gateway ensures data sovereignty requirements are met while maintaining full platform functionality, keeping sensitive data within organizational boundaries.

Community Support and Ecosystem

Both LibreChat and Open WebUI maintain active open-source communities, with Open WebUI particularly notable for its Pipeline initiative. These pipelines extend Tools and Functions capabilities, enabling:

- Custom workflow creation

- Dynamic prompt processing

- Real-time data integration

- Enhanced interaction patterns

Pipelines are a way to extend Open WebUI's native capabilities. They are generic Python scripts that sit between your Open WebUI instance and the LLM in use.
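To give a feel for what such a script might look like, here is a small filter-style sketch that redacts email addresses before a request reaches the model. The `Pipeline` class and `inlet` method are modeled on the filter examples in the Open WebUI Pipelines project as I understand them; treat the exact signature as an assumption and check the project's own examples before relying on it. The filter name and regex are made up for illustration.

```python
import asyncio
import re
from typing import Optional

# Deliberately simple pattern; real PII detection needs more than a regex.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


class Pipeline:
    def __init__(self):
        self.name = "Redact Emails Filter"  # hypothetical filter name

    async def inlet(self, body: dict, user: Optional[dict] = None) -> dict:
        # Runs on the request before it is forwarded to the LLM.
        # Rewrites each message's text in place and returns the body.
        for message in body.get("messages", []):
            content = message.get("content")
            if isinstance(content, str):
                message["content"] = EMAIL.sub("[email]", content)
        return body


request_body = {
    "messages": [
        {"role": "user", "content": "Mail me at jane@example.com please"}
    ]
}
filtered = asyncio.run(Pipeline().inlet(request_body))
```

Because the script only sees and returns a plain dictionary, the same pattern extends to prompt rewriting, logging, or routing decisions.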

Observability: Understanding Your AI Operations

The Portkey integration provides detailed visibility into AI operations for both platforms through a centralized dashboard tracking over 40 operational metrics. Teams can monitor:

- Cost allocation across departments and projects

- Response latencies and logs for each model request

- Token usage patterns and optimization opportunities

- User interaction patterns and feedback

- Custom metadata for detailed analysis

This observability helps organizations understand usage patterns, optimize costs, and continuously improve their AI implementations.
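The kind of roll-up listed above, such as cost allocation by department, amounts to grouping request logs by a metadata field. A minimal sketch follows; the log records, field names, and the `summarize` helper are invented for illustration and do not reflect Portkey's log schema.

```python
from collections import defaultdict

# Hypothetical request logs tagged with custom metadata.
logs = [
    {"department": "support", "model": "gpt-4o-mini",
     "tokens": 1200, "cost_usd": 0.25, "latency_ms": 800},
    {"department": "support", "model": "llama3",
     "tokens": 900, "cost_usd": 0.5, "latency_ms": 400},
    {"department": "legal", "model": "gpt-4o-mini",
     "tokens": 3000, "cost_usd": 0.75, "latency_ms": 1200},
]


def summarize(records: list[dict], key: str) -> dict:
    """Aggregate request count, tokens, cost, and mean latency per group."""
    totals = defaultdict(
        lambda: {"requests": 0, "tokens": 0, "cost_usd": 0.0, "latency_ms": 0}
    )
    for record in records:
        group = totals[record[key]]
        group["requests"] += 1
        group["tokens"] += record["tokens"]
        group["cost_usd"] += record["cost_usd"]
        group["latency_ms"] += record["latency_ms"]
    # Convert summed latency into a per-request average.
    for group in totals.values():
        group["avg_latency_ms"] = group.pop("latency_ms") / group["requests"]
    return dict(totals)


by_department = summarize(logs, "department")
by_model = summarize(logs, "model")
```

Grouping by `"model"` instead of `"department"` answers a different question from the same logs, which is why tagging requests with metadata up front pays off.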

Making the Right Choice

The decision between LibreChat and Open WebUI depends on your organization's workflow patterns. Choose LibreChat when you need enterprise authentication flexibility and robust moderation for larger deployments. Opt for Open WebUI when streamlined user management and strong administrative control are priorities.

Both platforms, when integrated with Portkey's AI Gateway, provide the administrative, observability, and reliability features needed for production deployments. Whether you're building your first AI application or scaling existing workflows, this combination gives you the infrastructure to grow while maintaining control over your data and operations.

Learn how to integrate LibreChat and Open WebUI with Portkey.